Beyond "Generated with AI"

Introducing the AI Collaboration Trace (ACT) – A More Nuanced Way to Acknowledge Your AI Thought Partner

As an educator and leader deeply immersed in the intersection of AI and learning, I’ve been reflecting a lot on a growing challenge: How do we authentically and transparently acknowledge the role AI plays in our creative and intellectual work? Many of us are now using AI not just as a simple tool, but as a dynamic thought partner. Yet, our methods for giving credit often feel… well, a bit flat.

A simple footer stating "Generated with AI" or "Assisted by ChatGPT" barely scratches the surface of these complex, iterative collaborations. It doesn't capture the back-and-forth, the prompts, the refinements, or, crucially, the human insight that guides the process. If we're truly partnering with these powerful tools, shouldn't our way of talking about that partnership be more nuanced? Frankly, many people hesitate to even share that they "used AI" because the phrase is so maddeningly vague. Did you just ask Gemini to write your entire report while you kicked back with a coffee (we've all seen those cartoons!), or did you engage in a deep, iterative dialogue, using AI tools to support and push your thinking and to create something far more sophisticated than you might have alone? That ambiguity is exactly what we need to move past.

I’ve been experimenting with different strategies to address this challenge. My most recent one has been helpful enough that I want to share it, in the hope that others will critique it and riff on it. It’s a framework that I call the AI Collaboration Trace (ACT). It’s designed to move us beyond simplistic acknowledgments and towards a more robust, transparent documentation of the human-AI creative workflow.

What is the AI Collaboration Trace (ACT)?

At its heart, the ACT is a structured method for detailing how you collaborated with an AI on a specific piece of work. Think of it less like a traditional citation for a static source and more like a lab notebook or a project diary that outlines the key steps in your collaborative journey with an AI. It’s about showcasing the process, the inputs (both yours and the AI's), and the iterative refinements that led to the final output.

The goal isn't just to say "AI was here," but to provide a clear and honest account of the AI's role and, just as importantly, your own intellectual contributions in guiding and shaping the work.

Why ACT? The Limits of "AI Assisted" Labels

You've likely seen the disclaimers. While they signal AI involvement, they often fall short for several reasons:

They lack specificity: Which AI? What was it asked to do? What kind of output did it provide?

They don't reflect the iterative process: Many of us don't just get a perfect answer on the first try. We prompt, the AI responds, we refine our prompt, the AI responds again, and so on. This dialogue is where much of the "thought partnership" happens.

They can inadvertently diminish the human role: A generic label might imply the AI did most of the heavy lifting, when in reality, the human user is often the architect of the inquiry, the critical evaluator of outputs, and the integrator of information.

They don't help others understand the methodology: For academic or research purposes, knowing how AI contributed can be as important as knowing that it contributed.

The ACT framework aims to address these shortcomings by providing a more detailed and process-oriented approach. It’s about making the "black box" of AI interaction a little more transparent.

How the ACT Works: Deconstructing the Trace

The philosophy behind ACT is to acknowledge AI as a collaborative partner. It’s structured to capture the key elements of this partnership. An ACT disclosure would be an appendix or a supplementary document. It's composed of a few key parts:

Preamble:

A brief introductory sentence affirming the use of AI as a thought partner and introducing the ACT.

Example: "This article was developed with AI as a thought partner. The following AI Collaboration Trace (ACT) details the iterative process..."

Overall Interaction Summary:

This section provides the high-level context:

AI Platform(s) Utilized: (e.g., Google Gemini, OpenAI ChatGPT)

Primary Model(s) Engaged: (e.g., Gemini 2.5 Pro, OpenAI o3)

Date(s) of Interaction: (e.g., June 1, 2025 – June 3, 2025)

(Optional) Session Identifier(s): (e.g., Conversation IDs or share links, if available and appropriate)

Interaction Cycles (The Core of the ACT):

This is where the detailed collaboration is documented. Each "cycle" represents a significant turn or segment in your interaction with the AI. You'd create an entry for each meaningful cycle.

Each cycle includes:

Purpose of Cycle: What were you trying to achieve in this specific interaction? (e.g., Ideation for topic X, Summarization of uploaded document Y, Drafting an introduction, Refining arguments).

User Input (U):

Prompt: Your specific question, instruction, or prompt to the AI.

Attached Data/Context: Any files you uploaded, text you pasted, or specific prior context you directed the AI to consider.

AI Configuration (AI-C):

AI Model/Type: The category of AI model used (e.g., General Conversational Model, Reasoning & Problem Solving Model, Specialized Model for code/images).

Key Settings/Tools Leveraged: Any specific AI settings or tools used (e.g., Web access enabled, Code interpreter used, Specific plugin activated).

AI Output (AI-O):

Nature & Summary: A description of what the AI provided in response.

(Optional) Key Contribution/Excerpt: A particularly pivotal idea, phrase, or piece of information from the AI's output.

Reflexive Element & Workflow Progression (R): This is a crucial part. How did the AI's output influence your thinking? How did it refine your ideas or direct your next steps? This highlights the human-AI interplay and your role in the process.

Final Output Derivation Statement:

A concluding summary of how the sum of these AI interactions contributed to the final piece of work.

Example: "The AI's contributions, as detailed in the cycles above, were instrumental in brainstorming initial concepts, structuring the argument, and refining language, while the core research and final articulation remained my own."
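For readers who like to see a structure written out as code, here is a minimal Python sketch of the parts described above. It is only an illustration, not how the generator tool is built: the names `InteractionCycle`, `CollaborationTrace`, and `render_trace` are hypothetical, the sample prompt and cycle values are made up, and the text layout simply mirrors the sample trace that appears later in this post.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class InteractionCycle:
    """One meaningful turn in the collaboration (hypothetical structure)."""
    purpose: str
    prompt: str
    attached_context: str
    model_type: str
    tools: str
    output_summary: str
    reflexive_element: str
    key_excerpt: Optional[str] = None  # optional in the framework


@dataclass
class CollaborationTrace:
    """The full ACT: preamble, summary, cycles, derivation statement."""
    preamble: str
    platforms: str
    models: str
    dates: str
    derivation_statement: str
    session_ids: Optional[str] = None  # optional in the framework
    cycles: List[InteractionCycle] = field(default_factory=list)


def render_trace(trace: CollaborationTrace) -> str:
    """Render the trace as plain text that can be pasted into a document."""
    lines = [
        trace.preamble,
        "",
        "--- AI COLLABORATION TRACE (ACT) ---",
        "1. OVERALL INTERACTION SUMMARY",
        f"- AI Platform(s) Utilized: {trace.platforms}",
        f"- Primary Model(s) Engaged: {trace.models}",
        f"- Date(s) of Interaction: {trace.dates}",
    ]
    if trace.session_ids:
        lines.append(f"- Session Identifier(s): {trace.session_ids}")
    lines.append("2. INTERACTION CYCLES")
    for number, cycle in enumerate(trace.cycles, start=1):
        lines += [
            f"Interaction Cycle #{number}:",
            f"- Purpose of Cycle: {cycle.purpose}",
            "- User Input (U):",
            f'  - Prompt: "{cycle.prompt}"',
            f"  - Attached Data/Context: {cycle.attached_context}",
            "- AI Configuration (AI-C):",
            f"  - AI Model/Type: {cycle.model_type}",
            f"  - Key Settings/Tools Leveraged: {cycle.tools}",
            "- AI Output (AI-O):",
            f"  - Nature & Summary: {cycle.output_summary}",
        ]
        if cycle.key_excerpt:
            lines.append(f'  - Key Contribution/Excerpt: "{cycle.key_excerpt}"')
        lines.append(
            f"- Reflexive Element & Workflow Progression (R): {cycle.reflexive_element}"
        )
    lines += [
        "3. FINAL OUTPUT DERIVATION STATEMENT",
        trace.derivation_statement,
        "-- End of AI Collaboration Trace (ACT) --",
    ]
    return "\n".join(lines)


# Illustrative values only; the cycle below is invented for this sketch.
trace = CollaborationTrace(
    preamble="This article was developed with AI as a thought partner.",
    platforms="Google Gemini",
    models="Gemini 2.5 Pro",
    dates="June 1, 2025",
    derivation_statement="The core research and final articulation remained my own.",
)
trace.cycles.append(
    InteractionCycle(
        purpose="Drafting an introduction",
        prompt="Draft an opening paragraph for my article.",
        attached_context="None",
        model_type="General Conversational Model",
        tools="None",
        output_summary="A three-sentence draft introduction.",
        reflexive_element="I kept the framing but rewrote the first sentence myself.",
    )
)
print(render_trace(trace))
```

Keeping the optional fields (`session_ids`, `key_excerpt`) as `None` by default means they simply drop out of the rendered text when you have nothing to report, which matches how the framework treats them.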

Putting ACT into Practice: Tools to Make it Easier

Now, you might be thinking, "That sounds like a lot of documentation!" And you're right, it can be if you're doing it all manually from scratch. But, remember, we have access to AI. Using Google Gemini 2.5 Pro, I've developed a couple of tools to streamline this process.

The ACT Generator Tool:

I created a simple, interactive web tool (you might have seen me share an earlier version if you're a long-time reader!). You can find it here.

How it helps:

It provides fillable fields for all sections of the ACT.

It uses dropdowns for common choices (like "Purpose of Cycle" or "AI Model/Type") to speed things up.

You can dynamically add as many "Interaction Cycles" as you need.

Once you've entered your information, it generates a clearly formatted ACT text that you can copy and paste into your work.

AI-Assisted ACT Generation (Using a Special Prompt):

Why not use the AI itself to help document the collaboration? I've crafted a detailed Markdown prompt that you can give to the AI you're working with (like Google Gemini, ChatGPT, etc.). You can find the full prompt below.

How it works:

You copy this special prompt (see the prompt below) and paste it into an AI chat. This has to be the actual chat whose use you want to cite. If you are using multiple tools, that’s okay! Just use the prompt in each chat that you want to include in your ACT citation.

The prompt explains the ACT framework to the AI and asks it to review your current conversation history.

The AI then attempts to identify the distinct "Interaction Cycles" and draft them in the correct ACT format, pulling out your prompts and its responses.

Your role is still key: The AI can do a decent job of identifying inputs and outputs, but you'll likely need to review, refine, and especially elaborate on the "Purpose of Cycle" and the "Reflexive Element & Workflow Progression," as these require your unique insight into your own thought process.

Using these tools in conjunction can significantly lower the barrier to creating a comprehensive ACT. The AI helps draft the cycles, and the generator tool helps structure everything and even offers AI-powered suggestions for reflection.
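If you want a quick way to spot where the AI guessed at your thinking, something like the following Python sketch could help. It is my own illustration under stated assumptions, not a feature of the generator tool: the function name `fields_needing_review`, the list of hedging words, and the sample draft are all hypothetical. It scans an AI-drafted trace and flags "Purpose of Cycle" and "Reflexive Element" entries that are empty, still contain a bracketed placeholder, or use guessing language such as "likely."

```python
import re

# The two fields that depend most on the human author's own insight.
REVIEW_LABELS = (
    "Purpose of Cycle",
    "Reflexive Element & Workflow Progression (R)",
)
# Assumed markers of an AI guess or an unfilled placeholder.
GUESS_MARKERS = ("likely", "possibly", "probably", "may have", "would have", "[")


def fields_needing_review(draft: str):
    """Return (cycle number, field label, matched markers) for fields to revisit."""
    flagged = []
    cycle = None
    for raw_line in draft.splitlines():
        # Drop list bullets and surrounding whitespace.
        line = raw_line.strip().lstrip("-* ").strip()
        match = re.match(r"Interaction Cycle #(\d+):", line)
        if match:
            cycle = int(match.group(1))
            continue
        for label in REVIEW_LABELS:
            prefix = label + ":"
            if line.startswith(prefix):
                text = line[len(prefix):].strip()
                hits = [m for m in GUESS_MARKERS if m in text.lower()]
                if not text or hits:
                    flagged.append((cycle, label, hits))
    return flagged


# Invented sample draft, shaped like the prompt's output format.
sample_draft = """
Interaction Cycle #1:
- Purpose of Cycle: Drafting an introduction
- Reflexive Element & Workflow Progression (R): The user likely reviewed this draft for accuracy.
Interaction Cycle #2:
- Purpose of Cycle: Refining arguments
- Reflexive Element & Workflow Progression (R): I rejected the second suggestion and rewrote the opening myself.
"""

for cycle, label, hits in fields_needing_review(sample_draft):
    print(f"Cycle {cycle}: revisit '{label}' (markers: {hits})")
```

A keyword check like this is deliberately crude. It only points you to the entries worth rereading; the actual reflection still has to come from you.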

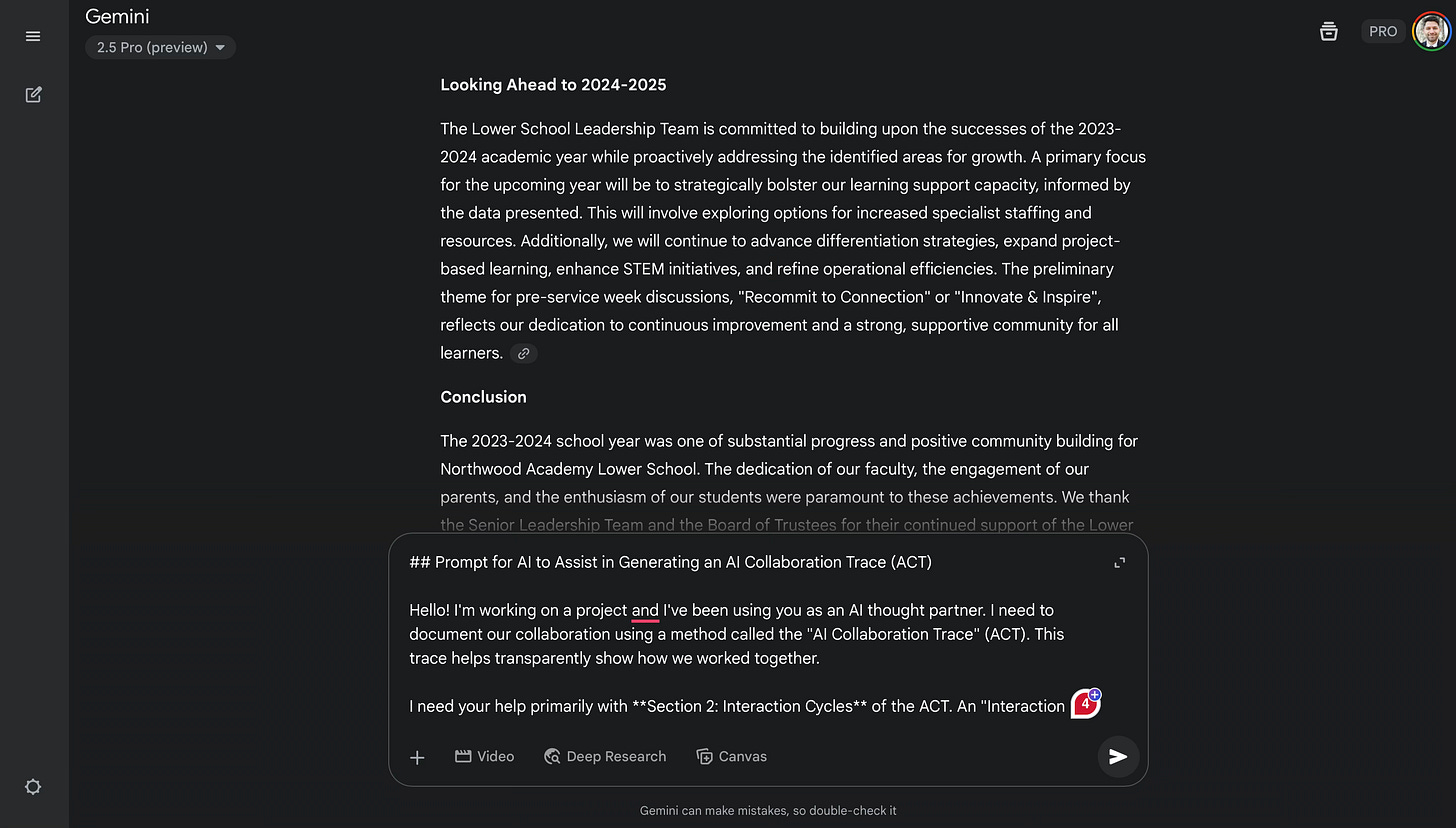

Special ACT Prompt

## Prompt for AI to Assist in Generating an AI Collaboration Trace (ACT)

Hello! I'm working on a project and I've been using you as an AI thought partner. I need to document our collaboration using a method called the "AI Collaboration Trace" (ACT). This trace helps transparently show how we worked together.

I need your help primarily with **Section 2: Interaction Cycles** of the ACT. An "Interaction Cycle" is defined as a segment of our conversation that typically includes:

1. **Purpose of Cycle:** The main goal I was trying to achieve with my prompt(s) in this segment (e.g., ideation, summarization, knowledge gathering, drafting, refining, problem-solving).

2. **User Input (U):**

    * **Prompt:** My specific question or instruction to you.

    * **Attached Data/Context:** Any documents, previous text, or specific information I asked you to consider.

3. **AI Configuration (AI-C):**

    * **AI Model/Type:** The type of model I was interacting with (e.g., General Conversational, Reasoning & Problem Solving, Research & Summarization, Specialized Model, Fine-tuned Model). If you know your specific model type or capabilities you used for a response, please note it.

    * **Key Settings/Tools Leveraged:** Any specific settings I might have indicated or tools you used (e.g., web access, code execution, document analysis, image generation).

4. **AI Output (AI-O):**

    * **Nature & Summary:** A description of your response and its key elements.

    * **(Optional) Key Contribution/Excerpt:** Any specific part of your response that was particularly important or pivotal.

5. **Reflexive Element & Workflow Progression (R):** How your output influenced my thinking, refined my ideas, or directed my next actions in our collaboration.

**Your Task:**

Please review our current conversation history (or a provided transcript segment, if I give you one). Based on this history, please help me identify and structure distinct "Interaction Cycles."

For each cycle you identify, please try to extract or infer the information for the five points listed above (Purpose, User Input, AI Configuration, AI Output, Reflexive Element).

**Output Format for Each Interaction Cycle:**

Please format each identified cycle like this:

```
Interaction Cycle #[Number]:
- Purpose of Cycle: [Your best understanding of my goal for this cycle]
- User Input (U):
  - Prompt: "[My prompt to you]"
  - Attached Data/Context: [Any data/context I provided]
- AI Configuration (AI-C):
  - AI Model/Type: [Your model type or capabilities used]
  - Key Settings/Tools Leveraged: [Any tools/settings you used or I specified]
- AI Output (AI-O):
  - Nature & Summary: [Summary of your response]
  - Key Contribution/Excerpt: "[A pivotal part of your response, if applicable]"
- Reflexive Element & Workflow Progression (R): [How your response likely influenced my next steps or thinking – you may need to make an educated guess or ask me to fill this in if it's not obvious]
```

**Information for the Full ACT (I will provide this, or you can use placeholders):**

To help you understand the full context, the ACT also includes:

* **Preamble:** (e.g., "This work was developed with AI as a thought partner...")

* **1. OVERALL INTERACTION SUMMARY:**

    * AI Platform(s) Utilized: [e.g., Google Gemini]

    * Primary Model(s) Engaged: [e.g., Gemini 1.5 Pro]

    * Date(s) of Interaction: [Date of our conversation]

    * Session Identifier(s): [If available, like a share link/conversation ID]

* **3. FINAL OUTPUT DERIVATION STATEMENT:** (e.g., "The AI's contributions were instrumental in...")

**Let's start by focusing on identifying and outlining the "Interaction Cycles" from our conversation.** If you can identify several cycles, please list them out. I can then review, edit, and add any missing details, especially for the "Reflexive Element."

If you are able to, after identifying the cycles, I might ask you to assemble the full ACT report using the information I provide for the other sections.

Thank you for helping me with this documentation!

How About An Example?

Sure thing! I’ll work through a very simple, fictional, but plausible example. All of the data that I will use in this example is fictional data from a fictional school.

Let’s suppose that I am the Lower School Division Head for a fictional school called Northwood Academy. It’s the end of the school year, and I want to create an End-of-Year report for our senior leadership team and our board, highlighting the wins and the challenges that we experienced this school year.

I could start from scratch, but my lower school leadership team takes detailed meeting minutes during our weekly leadership team meeting. I know that hidden within those minutes are many of the key moments and themes that I would like to highlight.

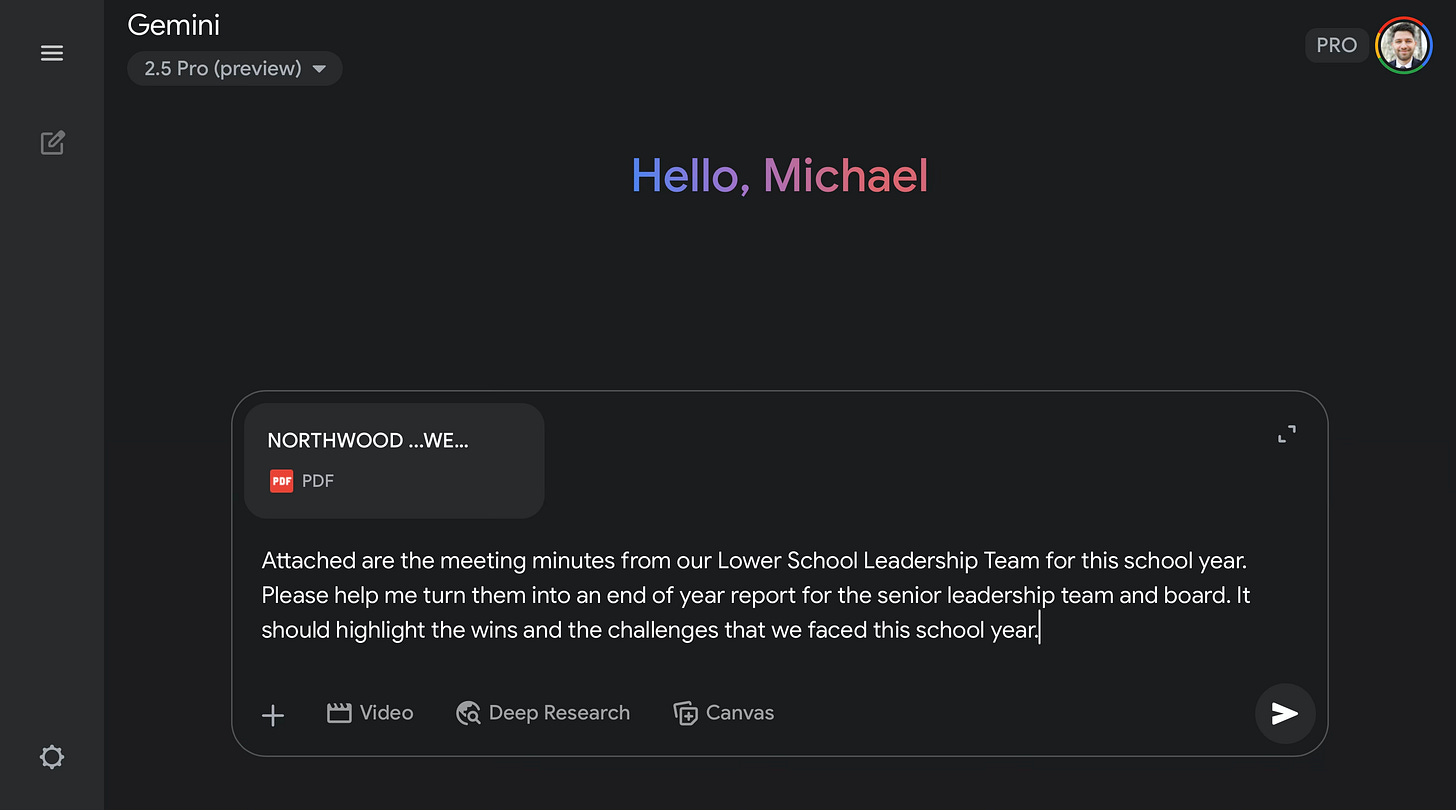

So, to help me get started, I decide to feed my meeting notes to AI with a prompt requesting help with my task.

Attached are the meeting minutes from our Lower School Leadership Team for this school year. Please help me turn them into an end of year report for the senior leadership team and board. It should highlight the wins and the challenges that we faced this school year.

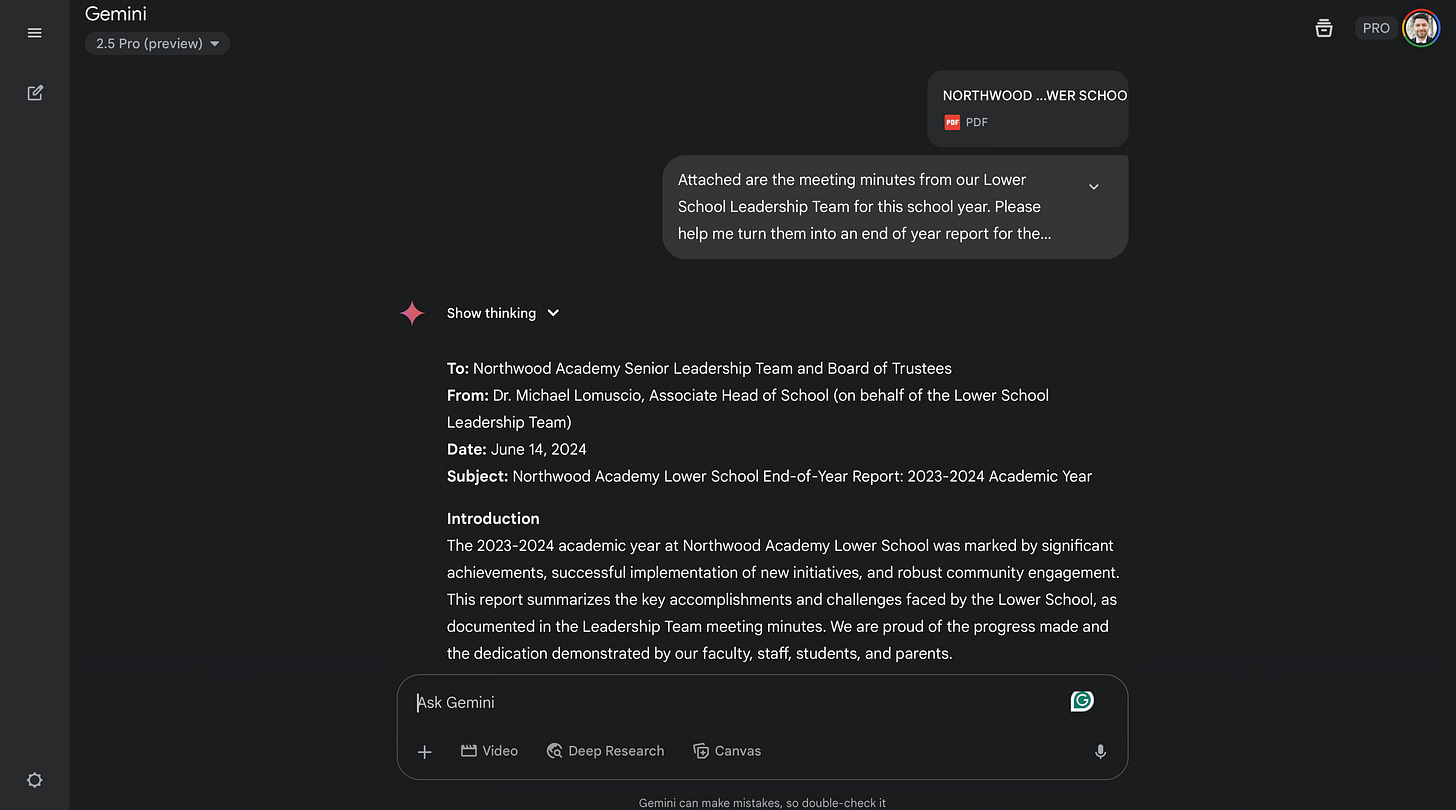

Google Gemini gets to work analyzing the meeting minutes and extracting themes. When it’s done, it produces a first draft of my report.

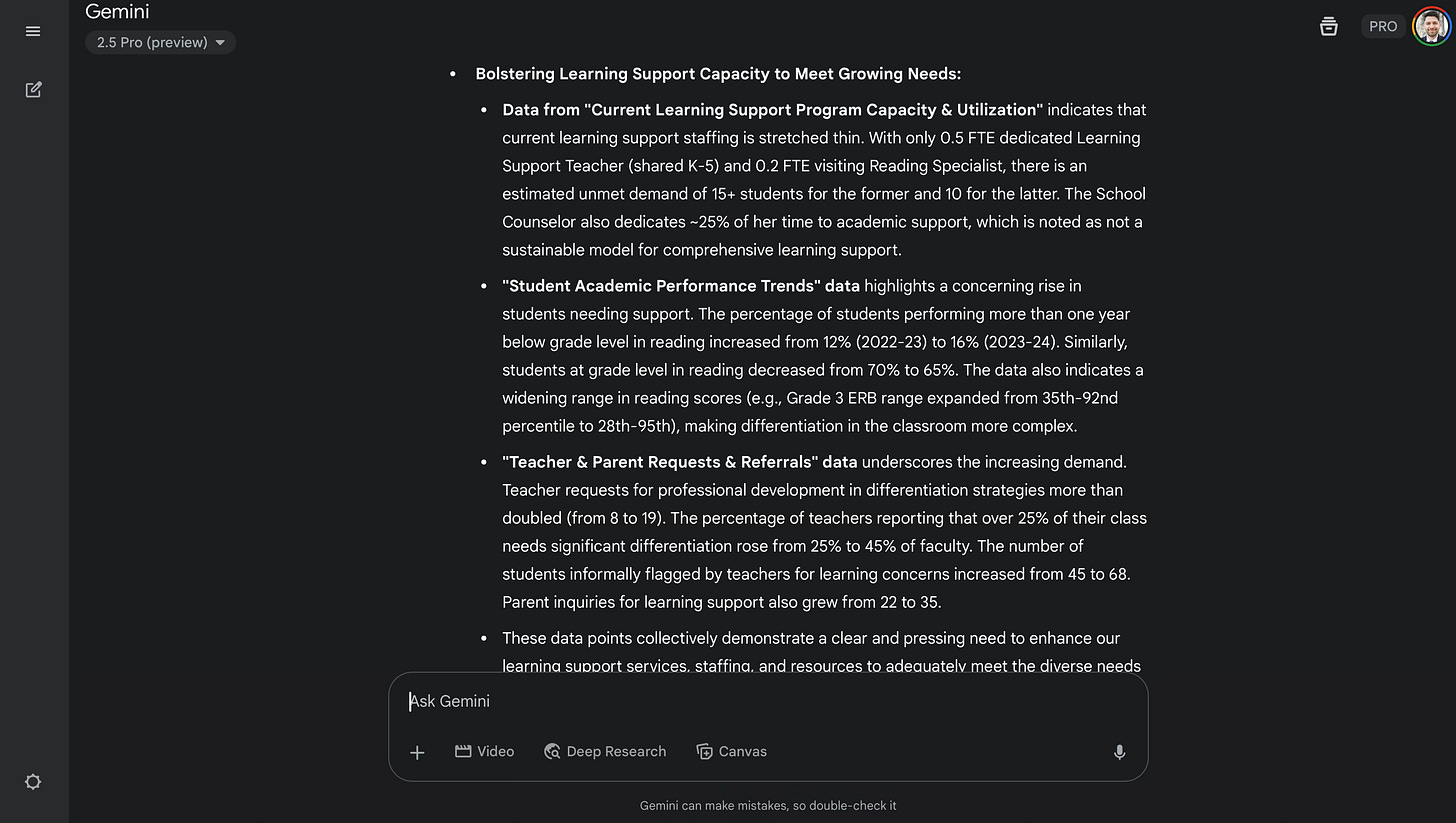

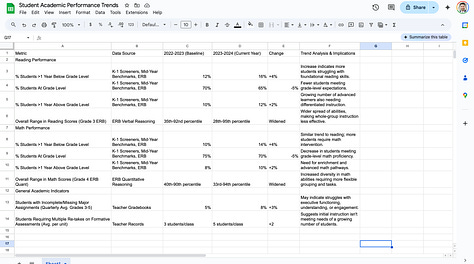

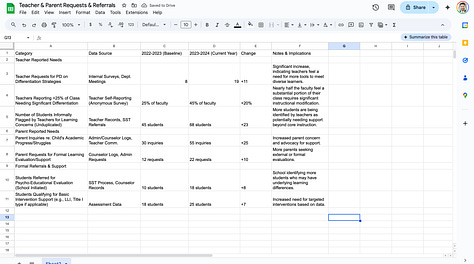

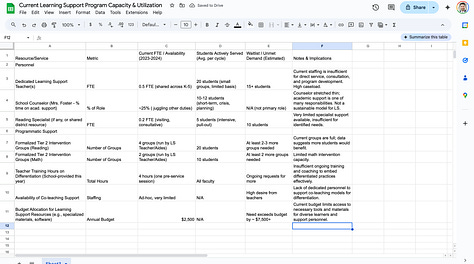

This draft is decent! I notice that one of the key challenges from the school year and opportunities for the future is bolstering our learning support program. The report casually mentions that, but it doesn’t give enough context. I remember that I actually have three spreadsheets that contain additional data that I would like to include in the report.

Student Academic Performance Trends

Teacher & Parent Requests & Referrals

Current Learning Support Program Capacity & Utilization

I decide to upload these additional documents to Gemini with a prompt asking it to analyze the spreadsheets and incorporate the data into the report.

This is great! However, I realized that we have a lot more data that I would like to cite regarding the opportunity to bolster learning support capacity for next school year. Please analyze this data and integrate it into the final report.

Gemini complies and shares a new draft with this data integrated into the report.

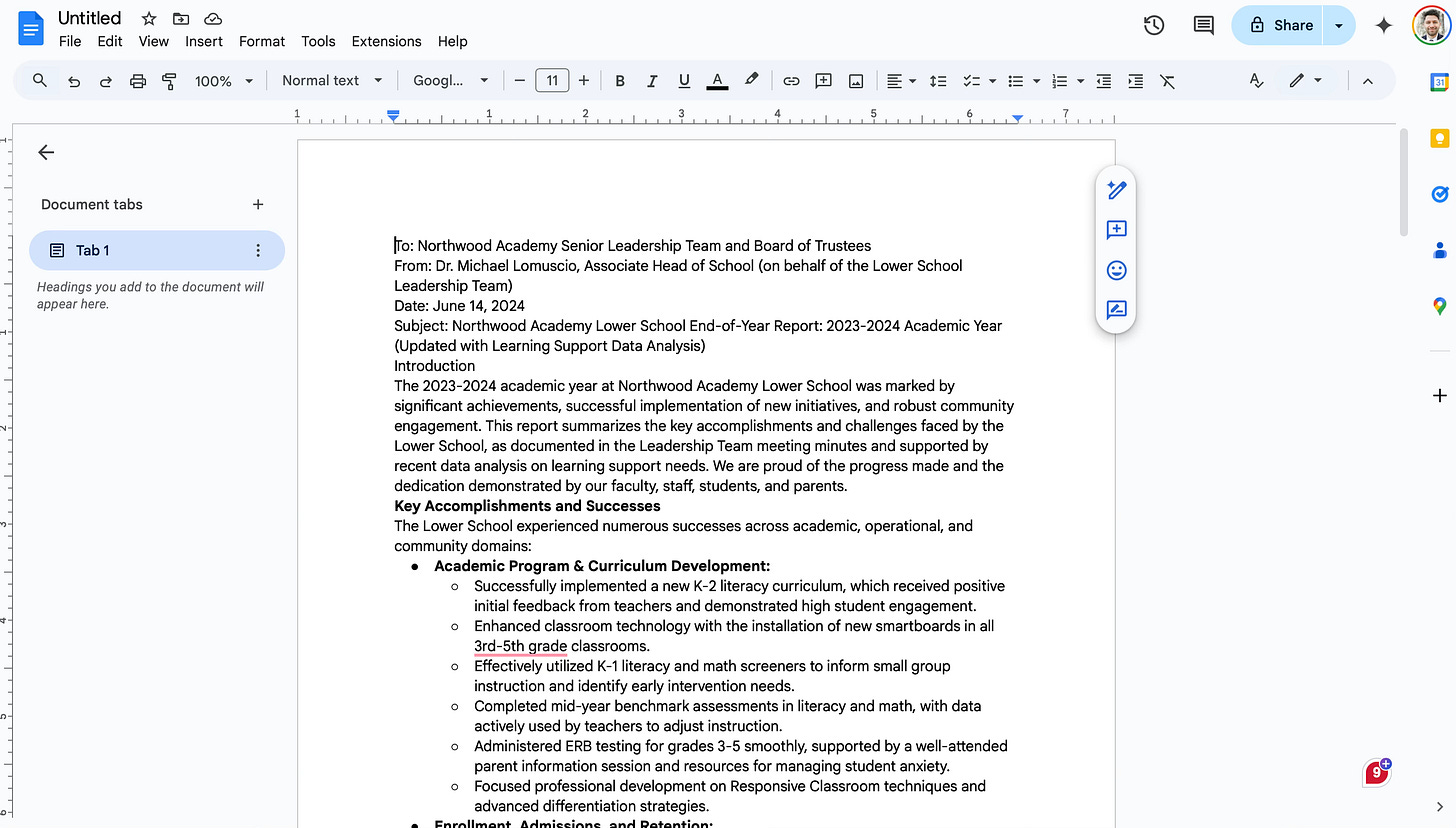

This new version is wonderful. My team and I can definitely use it as a jumping-off point. Now I want to share it with my team in a Google Doc so that everyone can make comments and edits.

I scroll to the bottom of the output.

Click on the Share button

And then click on “Export to Docs” (see the image below)

Now that I have a draft that I co-created with AI, I want to use ACT to help me transparently cite my use of AI. Within the same Google Gemini chat that I have been using to create the draft document, I paste the special ACT prompt that I shared above directly into the chat.

Google Gemini 2.5 Pro produces the following output. It is a complete AI Collaboration Trace (ACT) and details my use of AI in a way that is nuanced and transparent. A nice benefit is that it also allows others to repeat my process if they would like to, since they have the exact recipe that I used in my collaboration with AI. I will now take this ACT, copy it, and paste it at the end of my drafted Google Doc so that everyone who reads the report can see how AI was used to co-create the document.

This work was developed with AI as a thought partner. The following AI Collaboration Trace (ACT) details the iterative process, outlining user inputs, AI configurations, AI contributions, and the purpose of each significant interaction cycle.

--- AI COLLABORATION TRACE (ACT) ---

1. OVERALL INTERACTION SUMMARY

- AI Platform(s) Utilized: Google Gemini

- Primary Model(s) Engaged: Gemini 2.5 Pro

- Date(s) of Interaction: June 4

- Session Identifier(s): https://g.co/gemini/share/##########

2. INTERACTION CYCLES

Interaction Cycle #1:

- Purpose of Cycle: Drafting - To generate an end-of-year report for a Lower School Leadership Team, summarizing wins and challenges based on provided meeting minutes.

- User Input (U):

- Prompt: "Attached are the meeting minutes from our Lower School Leadership Team for this school year. Please help me turn them into an end of year report for the senior leadership team and board. It should highlight the wins and the challenges that we faced this school year."

- Attached Data/Context: "NORTHWOOD ACADEMY LOWER SCHOOL.pdf" (14 pages of meeting minutes from August 2023 - June 2024).

- AI Configuration (AI-C):

- AI Model/Type: General Conversational Model with Document Analysis and Summarization capabilities.

- Key Settings/Tools Leveraged: Document analysis (reading the PDF), text summarization, and report generation. User persona and background information were also considered for tailoring the output.

- AI Output (AI-O):

- Nature & Summary: The AI generated a formatted end-of-year report. It included a memo header, an introduction, sections for "Key Accomplishments and Successes (Wins)" and "Challenges and Areas for Growth," a "Looking Ahead" section, and a conclusion. The content was synthesized from the provided meeting minutes, categorizing various events and discussion points.

- Key Contribution/Excerpt: "The 2023-2024 academic year at Northwood Academy Lower School was marked by significant achievements, successful implementation of new initiatives, and robust community engagement. This report summarizes the key accomplishments and challenges faced by the Lower School, as documented in the Leadership Team meeting minutes." (This excerpt sets the professional tone and summarizes the report's intent effectively.)

- Reflexive Element & Workflow Progression (R): The AI's output provided a comprehensive first draft of the report. The user likely reviewed this draft for accuracy and completeness, and its structure would have served as a strong foundation. The user found it "great!" but realized more specific data was needed for a particular section, leading directly to the next cycle.

Interaction Cycle #2:

- Purpose of Cycle: Refining & Data Integration - To analyze new quantitative data related to learning support capacity and integrate these findings into the previously drafted end-of-year report to bolster a specific section.

- User Input (U):

- Prompt: "This is great! However, I realized that we have a lot more data that I would like to cite regarding the opportunity to bolster learning support capacity for next school year. Please analyze this data and integrate it into the final report."

- Attached Data/Context:

- "Current Learning Support Program Capacity & Utilization .xlsx - Sheet3.csv"

- "Student Academic Performance Trends.xlsx - Sheet1.csv"

- "Teacher & Parent Requests & Referrals.xlsx - Sheet2.csv"

- The previously AI-generated end-of-year report from Cycle #1.

- AI Configuration (AI-C):

- AI Model/Type: General Conversational Model with Data Analysis (CSV interpretation) and Text Integration capabilities.

- Key Settings/Tools Leveraged: Code execution environment (Python with pandas library) for reading and inspecting CSV data, data analysis, and text editing/integration to revise the existing report.

- AI Output (AI-O):

- Nature & Summary: The AI first used its code interpreter to load and inspect the three CSV files, summarizing key insights from each regarding learning support needs (capacity vs. demand, academic trends showing need, teacher/parent demand). It then integrated these data-driven findings primarily into the "Challenges and Areas for Growth" section of the report, specifically creating a subsection for "Bolstering Learning Support Capacity to Meet Growing Needs," and also updated the "Looking Ahead" section. The revised report was more robust and evidence-based in that area.

- Key Contribution/Excerpt: "These data points collectively demonstrate a clear and pressing need to enhance our learning support services, staffing, and resources to adequately meet the diverse needs of our students." (This sentence effectively summarizes the implications of the newly integrated data). The detailed bullet points citing specific data from each new file under the "Bolstering Learning Support Capacity..." section were also key.

- Reflexive Element & Workflow Progression (R): This refined report with integrated data likely provided the user with a much stronger, evidence-backed document for their intended audience. The user's next step would be to review this updated report, possibly make minor edits, and then finalize it for submission or further use in their project.

3. FINAL OUTPUT DERIVATION STATEMENT

The AI Collaboration Trace above illustrates key junctures where AI partnership facilitated brainstorming, critically evaluated preliminary ideas, assisted in structuring the argument, and helped refine language. While the core concepts and final articulation are my own, the AI's contributions were instrumental in accelerating the research process, broadening the scope of considered ideas, and enhancing the clarity of the final work.

-- End of AI Collaboration Trace (ACT) --

The Value for Educators, Learners, and Creators

Adopting something like the ACT offers several benefits:

Promotes Transparency and Honesty: It provides a clear and auditable trail of AI involvement.

Encourages Metacognition: The process of creating an ACT forces us to think critically about how we're using AI, what prompts are effective, and how AI output is shaping our work. This is invaluable for learning.

Highlights Human Contribution: By detailing the user inputs and reflexive elements, ACT underscores the critical thinking, creativity, and direction provided by the human partner. It helps answer the "what did you do?" question.

Fosters Responsible AI Use: It moves us towards a more deliberate and accountable way of integrating AI into our workflows.

Provides a Richer Context: For readers or evaluators, an ACT offers deeper insight into the creation process than a simple disclaimer ever could.

Moving Forward Thoughtfully

The landscape of AI is evolving at an astonishing pace, and our practices for integrating and acknowledging these tools need to evolve too. The AI Collaboration Trace is one attempt to foster a more transparent, reflective, and academically honest approach to human-AI partnership.

It's not about adding burdensome rules; it's about developing better habits of mind and practice. I encourage you to try out the ACT framework, use the generator tool, and experiment with the AI-assistance prompt. See if it helps you better articulate the fascinating and increasingly complex ways you're partnering with AI in your own work.

I'd love to hear your thoughts and experiences if you do. How can we, as a community of educators and learners, continue to refine these practices together?